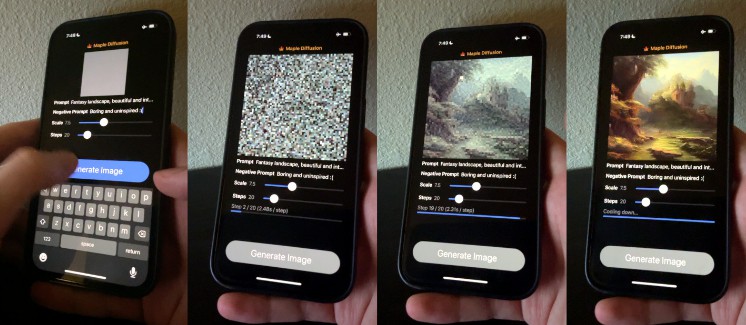

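Native Stable Diffusion inference on iOS / macOS using MPSGraph

Native Diffusion Swift Package

Native Diffusion runs Stable Diffusion models locally on macOS / iOS devices using the MPSGraph framework (not Python).

This is a Swift Package Manager wrapper of Maple Diffusion. It adds image-to-image, a Swift Package Manager package, and convenient ways to use the code, such as Combine publishers and async/await versions. It also supports downloading weights from any local or remote URL, including the app bundle itself.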

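Would not be possible without

- @madebyollin, who wrote the Metal Performance Shaders Graph pipeline

- @GuiyeC, who wrote the image-to-image implementation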

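Features

Get started in 10 minutes

- Extremely simple API. Generate an image in one line of code.

Make it do what you want

- Flexible interface. Pass in a prompt, guidance scale, steps, seed, and an image.

- One-time conversion script from .ckpt to Native Diffusion’s own memory-optimized format

- Supports Dreambooth models.

Built to be fun to code with

- Supports async/await, Combine publishers and classic callbacks.

- Optimized for SwiftUI, but usable in any kind of project, including command-line tools, UIKit or AppKit

Built for end-user speed and a great user experience

- 100% native. No Python, no environments; your users don’t need to install anything first.

- Built-in model download. Point it to the URL of a zip archive containing the model files. The package will download and install the model for later use.

- As fast as or faster than a cloud server on newer Macs

Commercial use allowed

- MIT license (code). We love attribution, but it’s not legally required.

- Generated images are licensed under the CreativeML Open RAIL-M license, meaning you can use them for almost anything, including commercial purposes.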

用法

One-line diffusion

In its simplest form, it’s a single line:

```swift
let image = try? await Diffusion.generate(localOrRemote: modelUrl, prompt: "cat astronaut")
```

You can give it a local or remote URL or both. If remote, the downloaded weights are saved for later.

The single-line version currently supports only a limited set of parameters.

See examples/SingleLineDiffusion for a working example.
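Saving downloaded weights “for later” implies a cache lookup keyed by the remote URL. The sketch below shows one way such a lookup could work. `ModelCache`, its folder-naming rule (the zip’s file name without the extension) and the cache root are all hypothetical and are not the package’s API.

```swift
import Foundation

/// Hypothetical helper, not the package's API: decides where weights downloaded
/// from a remote zip would be cached, and whether a download is still needed.
struct ModelCache {
    /// Assumed cache root, e.g. the app's Application Support directory.
    let root: URL

    /// ".../Diffusion.zip" -> "<root>/Diffusion"
    func localFolder(for remote: URL) -> URL {
        let name = remote.deletingPathExtension().lastPathComponent
        return root.appendingPathComponent(name, isDirectory: true)
    }

    /// True until an unpacked model folder exists for this URL.
    func needsDownload(_ remote: URL) -> Bool {
        var isDirectory: ObjCBool = false
        let exists = FileManager.default.fileExists(
            atPath: localFolder(for: remote).path, isDirectory: &isDirectory)
        return !(exists && isDirectory.boolValue)
    }
}

let cache = ModelCache(root: FileManager.default.temporaryDirectory)
let remote = URL(string: "http://localhost:8080/Diffusion.zip")!
print(cache.localFolder(for: remote).lastPathComponent)  // prints "Diffusion"
```

Keying the cache on the zip’s name keeps the check cheap (one `fileExists` call), at the cost of treating two different URLs with the same file name as the same model.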

As an observable object

Let’s add some UI. Here’s an entire working image generator app in a single SwiftUI view:

```swift
import SwiftUI
import NativeDiffusion // module name assumed; use the package's product name

struct ContentView: View {
    // 1: the Diffusion object owns model loading and generation
    @StateObject var sd = Diffusion()
    @State var prompt = ""
    @State var image: CGImage?
    @State var imagePublisher = Diffusion.placeholderPublisher
    @State var progress: Double = 0

    // Show loading progress until the model is ready, then generation progress
    var anyProgress: Double { sd.loadingProgress < 1 ? sd.loadingProgress : progress }

    var body: some View {
        VStack {
            DiffusionImage(image: $image, progress: $progress)
            Spacer()
            TextField("Prompt", text: $prompt)
                // 3: submit the prompt; the returned publisher streams results
                .onSubmit { self.imagePublisher = sd.generate(prompt: prompt) }
                .disabled(!sd.isModelReady)
            ProgressView(value: anyProgress)
                .opacity(anyProgress == 1 || anyProgress == 0 ? 0 : 1)
        }
        .task {
            // 2: prepare the models, downloading them if needed
            let path = URL(string: "http://localhost:8080/Diffusion.zip")!
            try! await sd.prepModels(remoteURL: path)
        }
        // 4: receive intermediate images and progress during generation
        .onReceive(imagePublisher) { r in
            self.image = r.image
            self.progress = r.progress
        }
        .frame(minWidth: 200, minHeight: 200)
    }
}
```

Here’s what it does:

1. Instantiate a Diffusion object

2. Prepare the models, downloading them if needed

3. Submit a prompt for generation

4. Receive updates during generation

See examples/SimpleDiffusion for a working example.
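The single progress bar in the example above tracks two phases: model loading, then generation. The same logic written as two free functions, under the hypothetical names `combinedProgress` and `progressBarVisible`, mirrors the `anyProgress` property and the `.opacity` modifier:

```swift
/// Hypothetical free-function version of `anyProgress`: report model loading
/// progress until loading finishes (reaches 1), then generation progress.
func combinedProgress(loading: Double, generation: Double) -> Double {
    loading < 1 ? loading : generation
}

/// Mirrors the `.opacity` rule: hide the bar when idle (0) or finished (1).
func progressBarVisible(_ progress: Double) -> Bool {
    !(progress == 0 || progress == 1)
}

print(combinedProgress(loading: 0.4, generation: 0.9))  // 0.4: still loading
print(combinedProgress(loading: 1.0, generation: 0.25)) // 0.25: generating
```

Because loading starts at 0, the bar stays hidden until the first progress update arrives.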

DiffusionImage

An optional SwiftUI view that is specialized for diffusion:

- Receives drag and drop of an image from e.g. Finder and sends it back to you via a binding (macOS)

- Automatically resizes the image to 512×512 (macOS)

- Lets users drag the image to Finder or other apps (macOS)

- Blurs the intermediate image while generating (macOS and iOS)
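One common way to fit an arbitrary image into 512×512 is to scale it until it covers the square (aspect fill) and then center-crop the overflow. The sketch below computes that geometry only; `aspectFillCrop` and `FillCrop` are hypothetical names, and DiffusionImage’s actual resize may stretch or crop differently.

```swift
/// Hypothetical sketch, not the package's implementation: scale an image so it
/// covers a `side`×`side` square (aspect fill), then center-crop the overflow.
struct FillCrop: Equatable {
    let scale: Double   // factor applied to both width and height
    let cropX: Double   // pixels trimmed from each of the left and right edges
    let cropY: Double   // pixels trimmed from each of the top and bottom edges
}

func aspectFillCrop(width: Double, height: Double, side: Double = 512) -> FillCrop {
    // The larger ratio guarantees both scaled dimensions are >= side.
    let scale = max(side / width, side / height)
    return FillCrop(scale: scale,
                    cropX: (width * scale - side) / 2,
                    cropY: (height * scale - side) / 2)
}

let wide = aspectFillCrop(width: 1024, height: 512)
// scale 1.0, cropX 256.0, cropY 0.0: the left and right quarters are trimmed
```

Aspect fill avoids distorting the input at the cost of losing its edges; a stretch would keep everything but change proportions.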

Install

Add https://github.com/mortenjust/native-diffusion in the “Swift Package Manager” tab in Xcode.
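For a SwiftPM-based project such as a command-line tool, the dependency could instead be declared in `Package.swift` roughly as follows. This is a sketch: the product name `NativeDiffusion`, the `main` branch, the target name `MyDiffusionTool` and the minimum platform versions are assumptions, so check them against the package’s own `Package.swift`.

```swift
// swift-tools-version:5.7
// Hypothetical Package.swift for a tool that depends on Native Diffusion.
// Assumed: product name "NativeDiffusion", branch "main", platform minimums.
import PackageDescription

let package = Package(
    name: "MyDiffusionTool",
    platforms: [.macOS(.v13), .iOS(.v16)],
    dependencies: [
        .package(url: "https://github.com/mortenjust/native-diffusion", branch: "main"),
    ],
    targets: [
        .executableTarget(
            name: "MyDiffusionTool",
            dependencies: [
                .product(name: "NativeDiffusion", package: "native-diffusion"),
            ]
        ),
    ]
)
```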

Preparing the weights

Native Diffusion stores the weights in a binary format that differs from the typical CKPT format: many small files, which it can (optionally) swap in and out of memory, enabling it to run on both macOS and iOS. You can use the converter script in the package to convert your own CKPT file.
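To make the swapping idea concrete, here is a minimal sketch of on-demand loading under a memory budget. It is not how Native Diffusion is implemented: `WeightCache`, the byte budget and the least-recently-used eviction policy are assumptions for illustration, and the `load` closure stands in for reading one small weight file from disk.

```swift
import Foundation

/// Hypothetical sketch, not Native Diffusion's implementation: weights live in
/// many small files; each is loaded on first use, and the least recently used
/// files are evicted whenever the total exceeds a byte budget.
final class WeightCache {
    private let budget: Int
    private let load: (String) -> Data      // stand-in for reading one small file
    private var entries: [String: Data] = [:]
    private var recency: [String] = []       // least recently used first
    private(set) var bytesInMemory = 0

    init(budget: Int, load: @escaping (String) -> Data) {
        self.budget = budget
        self.load = load
    }

    func weights(named name: String) -> Data {
        if let cached = entries[name] {
            recency.removeAll { $0 == name }
            recency.append(name)              // mark as most recently used
            return cached
        }
        let data = load(name)
        entries[name] = data
        recency.append(name)
        bytesInMemory += data.count
        // Evict older files until back under budget; never evict the file just loaded.
        while bytesInMemory > budget, recency.count > 1 {
            let victim = recency.removeFirst()
            bytesInMemory -= entries.removeValue(forKey: victim)?.count ?? 0
        }
        return data
    }

    func isResident(_ name: String) -> Bool { entries[name] != nil }
}

// Usage: a fake loader that "reads" 100 bytes per file, with a 250-byte budget.
let cache = WeightCache(budget: 250) { _ in Data(count: 100) }
for name in ["unet_a", "unet_b", "unet_a", "unet_c"] { _ = cache.weights(named: name) }
print(cache.isResident("unet_b"))  // false: evicted as least recently used
```

Splitting weights into many small files is what makes this kind of policy possible at all: with one monolithic checkpoint, the only choices are “all in memory” or “nothing”.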

Option 1: Pre-converted Standard Stable Diffusion v1.5

By downloading this zip file, you accept the creative license from StabilityAI.

Download ZIP. Please don’t use this URL in your software.

We’ll get back to what to do with it in a second.

Option 2: Preparing your own ckpt file

If you want to use your own CKPT file (like a Dreambooth fine-tune), you can convert it into the Maple Diffusion format:

- Download a Stable Diffusion model checkpoint (sd-v1-5.ckpt, or a derivative of it) to a folder, e.g. ~/Downloads/sd

- Set up and install Python with PyTorch, if you haven’t already.

```shell
# Grab the converter script
cd ~/Downloads/sd
curl -L https://raw.githubusercontent.com/mortenjust/maple-diffusion/main/Converter%20Script/maple-convert.py -o maple-convert.py

# You may need to install conda first: https://github.com/conda-forge/miniforge#homebrew
conda deactivate
# Remove any previous environment with this name (skip if it doesn't exist)
conda remove -n native-diffusion --all
conda create -n native-diffusion python=3.10
conda activate native-diffusion
pip install torch typing_extensions numpy Pillow requests pytorch_lightning

# Convert the checkpoint downloaded in the first step
python maple-convert.py ~/Downloads/sd/sd-v1-5.ckpt
```

The script will create a new folder called bins. We’ll get back to what to do with it in a second.

FAQ

Can I use a Dreambooth model?

Yes. Just copy the alpha* files from the standard conversion. This repo will include these files in the future. See this issue.
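Copying the alpha* files can be done in Finder or with `cp`; the sketch below does it from Swift, for example as part of a setup step in your own tooling. `copyFiles(withPrefix:from:to:)` is a hypothetical helper, not part of the package, and both folder paths are placeholders for your own standard and Dreambooth conversion outputs.

```swift
import Foundation

/// Copies every file whose name starts with `prefix` (the FAQ's `alpha*`) from a
/// standard conversion's bins folder into a Dreambooth conversion's bins folder.
/// Files that already exist in the destination are skipped, not overwritten.
/// Returns the names that were copied, sorted.
@discardableResult
func copyFiles(withPrefix prefix: String = "alpha",
               from source: URL, to destination: URL) throws -> [String] {
    let fm = FileManager.default
    let matches = try fm.contentsOfDirectory(atPath: source.path)
        .filter { $0.hasPrefix(prefix) }
        .sorted()
    var copied: [String] = []
    for name in matches {
        let target = destination.appendingPathComponent(name)
        if fm.fileExists(atPath: target.path) { continue }
        try fm.copyItem(at: source.appendingPathComponent(name), to: target)
        copied.append(name)
    }
    return copied
}

// Hypothetical usage; both paths are placeholders for your own conversions:
// try copyFiles(from: URL(fileURLWithPath: "/path/to/standard/bins"),
//               to: URL(fileURLWithPath: "/path/to/dreambooth/bins"))
```

Skipping existing files makes the helper safe to run more than once.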

Does it support image to image prompting?

Yes. Simply pass in an initImage to your SampleInput when generating.

It crashes

You may need to regenerate the model files with the Python script in the repo. This happens if you converted your ckpt model file before we added image-to-image.

Can I contribute? What’s next?

Yes! A rough roadmap:

- Stable Diffusion 2.0: new larger output images, upscaling, depth-to-image

- Add in-painting and out-painting

- Generate sizes and aspect ratios other than 512×512

- Upscaling

- Dreambooth training on-device

- Tighten up code quality overall. Most is proof of concept.

- Add image-to-image (done)

See Issues for smaller contributions.

If you’re making changes to the MPSGraph part of the codebase, consider making your contributions to the single-file repo (Maple Diffusion) and then integrating the changes into the wrapped file in this repo.

How fast is it?

On my MacBook Pro M1 Max, I get ~0.3 s/step, which is significantly faster than any Python/PyTorch/TensorFlow installation I’ve tried.
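As a rough worked example at that rate, ignoring model loading and image decoding (the step counts are illustrative, not the package’s defaults):

```math
t \approx n_{\text{steps}} \times 0.3\ \text{s/step}: \qquad 20 \times 0.3 = 6\ \text{s}, \qquad 50 \times 0.3 = 15\ \text{s}
```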

On an iPhone, a full generation should take a minute or two.

To attain usable performance without tripping over iOS’s 4GB memory limit, Native Diffusion relies internally on FP16 (NHWC) tensors, operator fusion from MPSGraph, and a truly pitiable degree of swapping models to device storage.
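A back-of-the-envelope estimate shows why FP16 matters here. Using the commonly cited parameter counts for Stable Diffusion v1 (an ~860M-parameter UNet and a ~123M-parameter text encoder, before counting the VAE or any activations), the weights alone come to:

```math
(860 + 123) \times 10^{6}\ \text{params} \times 4\ \text{B} \approx 3.9\ \text{GB (FP32)}, \qquad
983 \times 10^{6} \times 2\ \text{B} \approx 2.0\ \text{GB (FP16)}
```

FP32 weights would nearly fill a 4 GB budget by themselves; FP16 halves that, and swapping keeps only part of the remaining ~2 GB resident at a time.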

Does it support Stable Diffusion 2.0?

Not yet. We’d love some help with this; see the roadmap above.

I have a question, comment or suggestion

Feel free to post an issue!