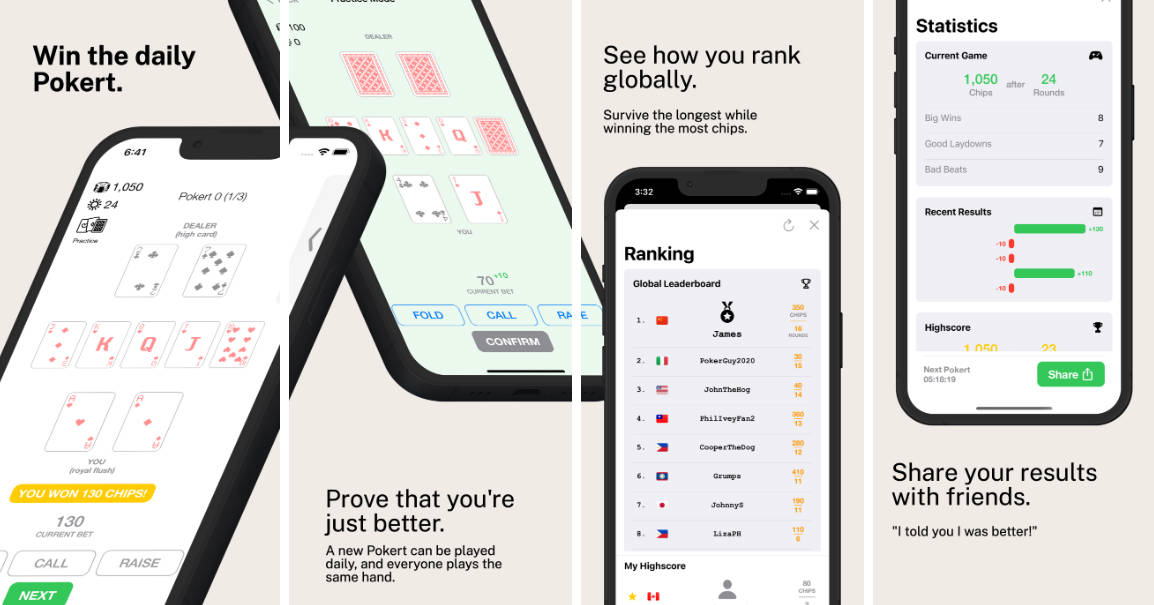

通过 AUSound 隔离实现人声衰减

现在安静

Apple Music Sing利用内置的隔音音频单元。这是一个可配置隔离人声的基本应用程序。

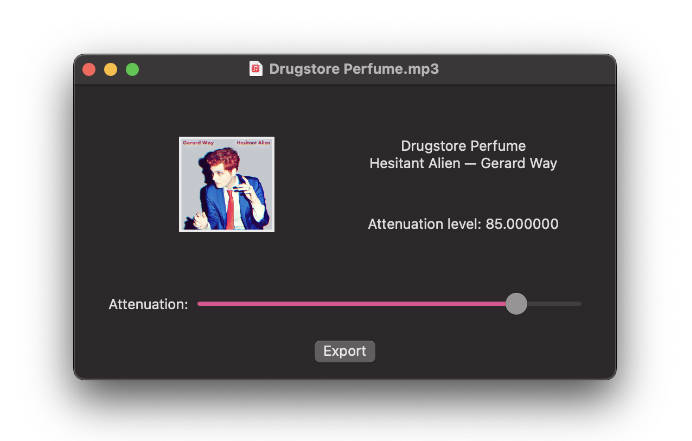

用法

在 macOS、iPadOS 或 iOS 上构建和运行。打开任何音频文件,播放将立即开始。相应地调整滑块,享受!

You can also export the current song as an M4A file with the current vocal attenuation level applied.
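Exporting with the effect applied means rendering the audio graph faster than real time rather than playing it. Here is a minimal sketch of one way to do that, assuming an `AVAudioEngine` whose player node is already wired through the isolation unit. It switches the engine into offline manual rendering mode and writes the output to an AAC (`.m4a`) file in chunks. `renderChunkSizes` and `exportProcessed` are hypothetical helpers, not this app's actual export code.

```swift
#if canImport(AVFoundation)
import AVFoundation
#endif

// Hypothetical helper: split a file of `totalFrames` into render passes of at
// most `maxFrames` each, matching the engine's manual-rendering frame limit.
func renderChunkSizes(totalFrames: Int64, maxFrames: UInt32) -> [UInt32] {
    precondition(maxFrames > 0, "maxFrames must be positive")
    var chunks: [UInt32] = []
    var remaining = totalFrames
    while remaining > 0 {
        let next = UInt32(min(remaining, Int64(maxFrames)))
        chunks.append(next)
        remaining -= Int64(next)
    }
    return chunks
}

#if canImport(AVFoundation)
// Hypothetical export routine: `engine` must already route `player` through the
// isolation effect to the main mixer, and must be stopped when this is called.
func exportProcessed(source: AVAudioFile, engine: AVAudioEngine,
                     player: AVAudioPlayerNode, to url: URL) throws {
    let format = source.processingFormat
    let maxFrames: AVAudioFrameCount = 4096
    try engine.enableManualRenderingMode(.offline, format: format,
                                         maximumFrameCount: maxFrames)
    try engine.start()
    player.scheduleFile(source, at: nil)
    player.play()

    // AAC settings; AVAudioFile infers the container from the URL's .m4a extension.
    let settings: [String: Any] = [
        AVFormatIDKey: kAudioFormatMPEG4AAC,
        AVSampleRateKey: format.sampleRate,
        AVNumberOfChannelsKey: format.channelCount,
    ]
    let output = try AVAudioFile(forWriting: url, settings: settings)
    guard let buffer = AVAudioPCMBuffer(pcmFormat: engine.manualRenderingFormat,
                                        frameCapacity: engine.manualRenderingMaximumFrameCount) else {
        engine.stop()
        return
    }

    // Pull each chunk through the graph and append whatever rendered successfully.
    for frames in renderChunkSizes(totalFrames: source.length, maxFrames: maxFrames) {
        let status = try engine.renderOffline(frames, to: buffer)
        if status == .success {
            try output.write(from: buffer)
        }
    }
    player.stop()
    engine.stop()
}
#endif
```

The chunking is split out into a plain function so the frame arithmetic can be checked without any audio hardware; for example, a 10,000-frame file rendered 4,096 frames at a time takes passes of 4096, 4096 and 1808 frames.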

(I did say it was basic. Hopefully it serves as an example of how to make use of the audio unit 🙂)

Vocal Isolation?

Beginning in iOS 16.0 and macOS 13.0, a new audio unit entitled AUSoundIsolation silently appeared. As of this writing:
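As a rough sketch of how an app might load the unit: the code below builds the component identifiers for an Apple effect unit and, on Apple platforms, wraps them in an `AVAudioUnitEffect` placed between a player node and the main mixer. The subtype code `'vois'` is an assumption on my part (it is believed to be the value of `kAudioUnitSubType_AUSoundIsolation` in recent AudioToolbox headers), so confirm it against your SDK. `fourCharCode`, `SoundIsolationComponent` and `makeIsolationChain` are hypothetical names for illustration.

```swift
#if canImport(AVFoundation)
import AVFoundation
#endif

// Hypothetical helper: pack a four-character ASCII code (e.g. "aufx") into the
// big-endian UInt32 form Core Audio uses for OSType/FourCharCode values.
func fourCharCode(_ code: String) -> UInt32 {
    precondition(code.utf8.count == 4, "four-character codes must be exactly 4 bytes")
    return code.utf8.reduce(UInt32(0)) { ($0 << 8) | UInt32($1) }
}

// Component identifiers for AUSoundIsolation.
// ASSUMPTION: subtype 'vois'; check kAudioUnitSubType_AUSoundIsolation in your SDK.
enum SoundIsolationComponent {
    static let componentType = fourCharCode("aufx")     // kAudioUnitType_Effect
    static let componentSubType = fourCharCode("vois")  // assumed AUSoundIsolation subtype
    static let manufacturer = fourCharCode("appl")      // kAudioUnitManufacturer_Apple
}

#if canImport(AVFoundation)
// Hypothetical helper: attach a player and the isolation effect to an engine,
// routing player -> AUSoundIsolation -> main mixer (iOS 16 / macOS 13 or later).
func makeIsolationChain(engine: AVAudioEngine,
                        format: AVAudioFormat?) -> (AVAudioPlayerNode, AVAudioUnitEffect) {
    var desc = AudioComponentDescription()
    desc.componentType = SoundIsolationComponent.componentType
    desc.componentSubType = SoundIsolationComponent.componentSubType
    desc.componentManufacturer = SoundIsolationComponent.manufacturer

    let player = AVAudioPlayerNode()
    let isolation = AVAudioUnitEffect(audioComponentDescription: desc)
    engine.attach(player)
    engine.attach(isolation)
    engine.connect(player, to: isolation, format: format)
    engine.connect(isolation, to: engine.mainMixerNode, format: format)
    return (player, isolation)
}
#endif
```

Passing the file's `processingFormat` as `format` keeps the chain at the source sample rate; the engine's main mixer handles conversion to the output device.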

- If you search for the unit’s name on GitHub, there are 9 results.

- If you search this on Google/Bing/[…], the majority of results are Audacity users complaining about it being incompatible.

(…in other words, another typical unannounced and undocumented addition. Thanks, Apple!)

This unit is silently the backbone of Apple Music Sing. A custom neural network is applied to the isolation audio unit, separating and reducing vocals. This is done entirely on-device, hence Apple’s mention of:

Apple Music Sing is available on iPhone 11 and later and iPhone SE (3rd generation) using iOS 16.2 or later.

This aligns with their second-generation neural engine (present in A13 and above).

Audio Unit Parameters

| Parameter ID | Name | Value | Description |
|---|---|---|---|
| 95782 | UseTuningMode | 1.0 | Used as a boolean; the default is 1.0. |
| 95783 | TuningMode | 1.0 | Similarly, this is set to 1.0. |
| 0 | kAUSoundIsolationParam_WetDryMixPercent | 85.0 | The amount by which to remove vocals. Should be 0.0 to 100.0; any higher value increases vocal volume significantly. (Try 1000.0 with your volume set to 1%.) |
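A minimal sketch of driving these parameters from code, assuming the IDs in the table and the global scope (element 0). `SoundIsolationParameter`, `clampedMix` and `setParameter` are hypothetical names; clamping to 0 to 100 simply avoids the vocal boost described for out-of-range values.

```swift
#if canImport(AudioToolbox)
import AudioToolbox
#endif

// Parameter IDs observed on AUSoundIsolation, as listed in the table.
// UseTuningMode and TuningMode are undocumented; their IDs come from inspection.
enum SoundIsolationParameter: UInt32 {
    case wetDryMixPercent = 0   // kAUSoundIsolationParam_WetDryMixPercent
    case useTuningMode = 95782  // defaults to 1.0, used as a boolean
    case tuningMode = 95783     // defaults to 1.0
}

// Hypothetical helper: keep the mix within 0...100, since larger values
// boost vocals sharply instead of removing them.
func clampedMix(_ percent: Float) -> Float {
    min(max(percent, 0), 100)
}

#if canImport(AudioToolbox)
// Hypothetical helper: write a parameter on the global scope of an AudioUnit
// (for example AVAudioUnitEffect.audioUnit). Returns Core Audio's OSStatus.
@discardableResult
func setParameter(_ unit: AudioUnit, _ parameter: SoundIsolationParameter,
                  _ value: Float) -> OSStatus {
    AudioUnitSetParameter(unit, parameter.rawValue, kAudioUnitScope_Global, 0, value, 0)
}
#endif
```

With an `AVAudioUnitEffect` named `isolation`, a slider callback could call `setParameter(isolation.audioUnit, .wetDryMixPercent, clampedMix(sliderValue))`.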

Audio Unit Properties

| Property ID | Name | Description |
|---|---|---|
| 7000 | CoreAudioReporterTimePeriod | ? |
| 30000 | NeuralNetPlistPathOverride | The path to load the property list named `aufx-nnet-appl.plist` for the neural network. |
| 40000 | NeuralNetModelNetPathBaseOverride | The directory to load weights and so forth from. If not specified, the `ModelNetPathBase` within its property list is used. |
| 50000 | DereverbPresetPathOverride | ? |
| 60000 | DenoisePresetPath | ? |

(If you’re a lone soul frantically searching for what these are in the near future, pull requests with their descriptions would be much appreciated.)
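For anyone doing that digging, a hedged starting point: the sketch below lists the property IDs from the table and, on Apple platforms, asks the unit via `AudioUnitGetPropertyInfo` how large each property's data is and whether it is writable. The value types these properties expect are undocumented, so the sketch deliberately neither reads nor sets them. `SoundIsolationProperty` and `probe` are hypothetical names.

```swift
#if canImport(AudioToolbox)
import AudioToolbox
#endif

// Undocumented AUSoundIsolation property IDs, as listed in the table.
enum SoundIsolationProperty: UInt32, CaseIterable {
    case coreAudioReporterTimePeriod = 7000
    case neuralNetPlistPathOverride = 30000
    case neuralNetModelNetPathBaseOverride = 40000
    case dereverbPresetPathOverride = 50000
    case denoisePresetPath = 60000
}

#if canImport(AudioToolbox)
// Hypothetical helper: print each property's data size and writability on the
// global scope. A non-zero status means the unit rejected that query.
func probe(_ unit: AudioUnit) {
    for property in SoundIsolationProperty.allCases {
        var size: UInt32 = 0
        var writable: DarwinBoolean = false
        let status = AudioUnitGetPropertyInfo(unit, property.rawValue,
                                              kAudioUnitScope_Global, 0,
                                              &size, &writable)
        print(property, "status:", status, "size:", size, "writable:", writable.boolValue)
    }
}
#endif
```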

MediaPlaybackCore.framework (which provides this functionality) appears to set only “NeuralNetModelNetPathBase” and “NeuralNetModelNetPathBaseOverride”, and by default sets “DereverbPresetPathOverride” to null (thus disabling it).

Although Apple Music only offers it alongside timed lyrics, the lyrics (whether timed by line or by word) are not a factor whatsoever in the model. This is especially apparent if you listen to any song where the vocals are distorted, or where background instrumentals drown them out. I imagine Apple simply does not provide the option for songs without timed lyrics because karaoke wouldn’t be nearly as fun.